A great way to learn a codebase is to modify it. This week, I summarize my experience creating a pull request to TF-Agents to improve its documentation.

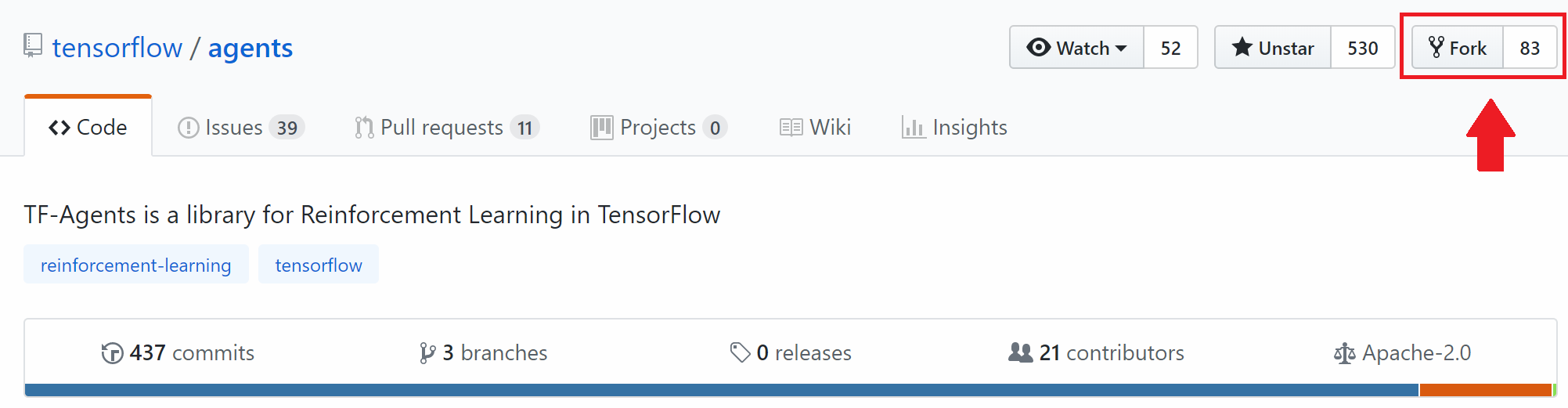

Fork Repository

To make changes to the existing codebase, I first needed to fork the tensorflow/agents repository. This was really easy: I just pressed the “Fork” button, and a new repository, seungjaeryanlee/agents, was created.

Afterwards, I cloned the fork to my local machine:

```bash
git clone https://github.com/seungjaeryanlee/agents.git
```

I had already set up my conda environment, so there was nothing else to do!
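Beyond cloning, a common (optional) convention when contributing through a fork is to register the original repository as a second remote, usually named `upstream`, and to make changes on a topic branch instead of `master`. The snippet below is a minimal sketch of that setup under those assumptions, not a record of exactly what I ran; the function `prepare_fork_clone` and the branch name are hypothetical.

```shell
# Hypothetical helper: prepare a local clone of a fork for a contribution.
prepare_fork_clone() {
  local repo_dir="$1"   # path to the local clone of the fork
  local branch="$2"     # name for the topic branch (hypothetical)
  # Register the original repository as a second remote named "upstream",
  # so the fork can later be synced with `git fetch upstream`.
  git -C "$repo_dir" remote add upstream https://github.com/tensorflow/agents.git
  # Create and switch to a topic branch for the changes.
  git -C "$repo_dir" checkout -q -b "$branch"
}
```

For example, `prepare_fork_clone agents fix-example-docs` would set up the clone made above. After committing, `git push origin fix-example-docs` publishes the branch to the fork, and GitHub then offers to open a pull request from it against tensorflow/agents.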

Improve Documentation

To familiarize myself with the codebase and the workflow, I decided to improve the documentation first. I was inspired by Paige Bailey (a.k.a. @DynamicWebPaige):

Want to get started with open-source software, but not with code?

👩‍🎨 Design a logo

⁉️ Answer questions on @StackOverflow

📒 Write docs!

👩‍🏫 Teach a course

📈 Triage issues, or help with project management

❤️ Post on social media

🐛 Submit issues

*All* contributions are valuable. pic.twitter.com/HuaFKWGNAE

— 👩‍💻 DynamicWebPaige @ #IO19 🧠✨ (@DynamicWebPaige) February 16, 2019

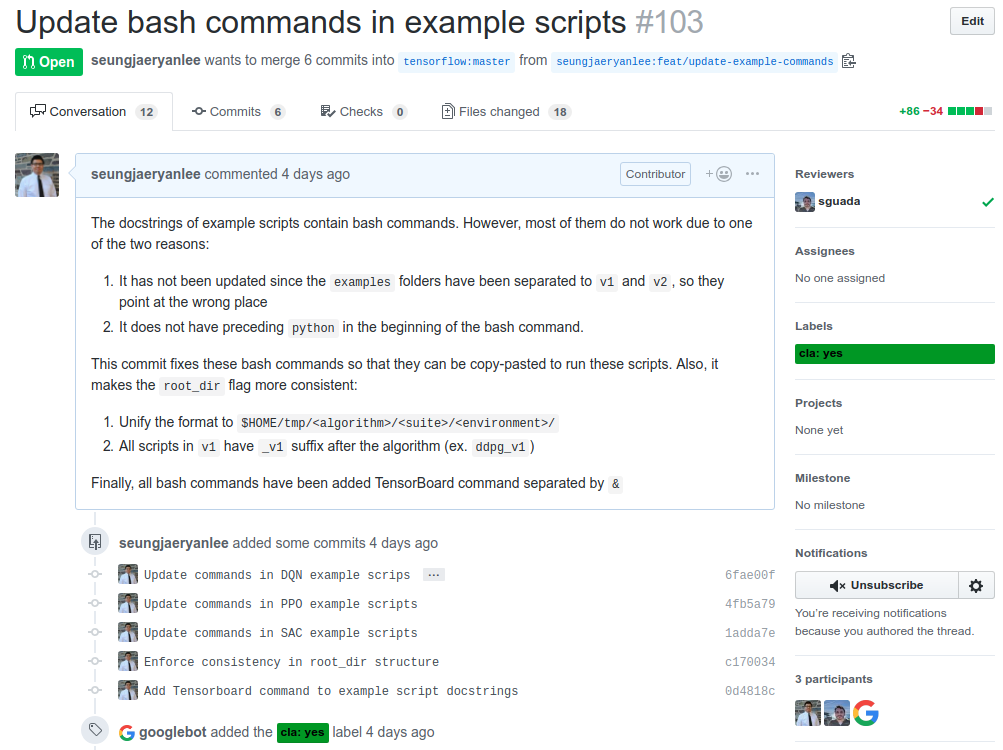

Fortunately, while setting up TF-Agents in my last post, I had found some outdated documentation. The example scripts contain bash commands showing how to run them, but those commands no longer worked because the directory structure of the example scripts had changed.

In the forked repository, I updated those commands and verified that the modified versions worked. Now it was time to ask for a review!

Create a Pull Request

In my personal repositories, I tend to get sloppy with pull requests, since I am both the author and the reviewer. Here, however, someone else would be reviewing, so the pull request had to clearly explain its intent. To learn how to write a good pull request description, I read posts on the topic from the GitHub Blog and the Atlassian Blog.

In the end, my pull request looked like this (you can also see it on GitHub as PR #103):

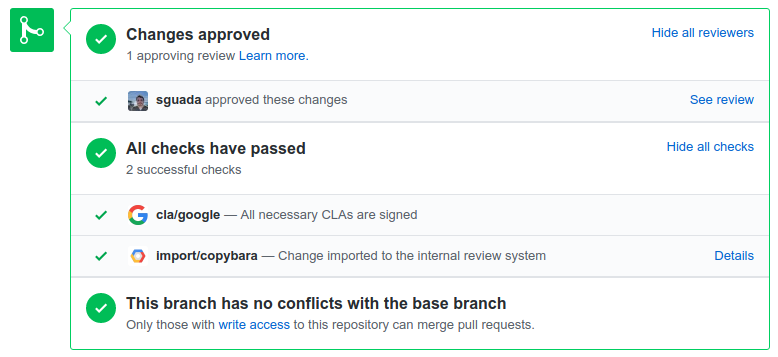

A few days later, Sergio Guadarrama (sguada) reviewed my PR and requested a few minor changes. After I made those changes, he approved my pull request.

Hopefully my pull request gets merged without additional fixes! 🤞

A first-time contributor needs to sign the Contributor License Agreement (CLA). I had already created a pull request to TF-Agents before (PR #58), so I could skip this step.

What’s Next?

Creating a documentation pull request was a nice start, but writing code for TF-Agents will bring many more challenges. Because parts of the code depend on one another, changing one part could break many others, so I want to become more familiar with the internal structure of TF-Agents first.

Meanwhile, I have also been re-reading the research papers that introduced the algorithms I will implement for my GSoC project. I will start with Random Network Distillation (RND), proposed in Exploration by Random Network Distillation by Burda et al. (2018). My mentors, Oscar Ramirez and Mandar Deshpande, also suggested that I research good evaluation methods to verify my implementations, so I have been surveying reinforcement learning environments and existing implementations.