In the last post, we played the game ourselves and got a better understanding of the environment, as well as some insight on how a “good” agent might behave. Today, we will now check how the agent observes and acts inside this environment.

Observation Space

The observation space of the Obstacle Tower consists of two components:

- The visual part

- The auxiliary part

The visual part is a single $168 \times 168$ image. It is exactly twice in hieght and width from the common $84 \times 84$ image found in the Arcade Learning Environment (ALE).

The auxiliary part is a vector that consists of two numbers: the number of keys the agent has, and the amount of time until the episode terminates.

Action Space

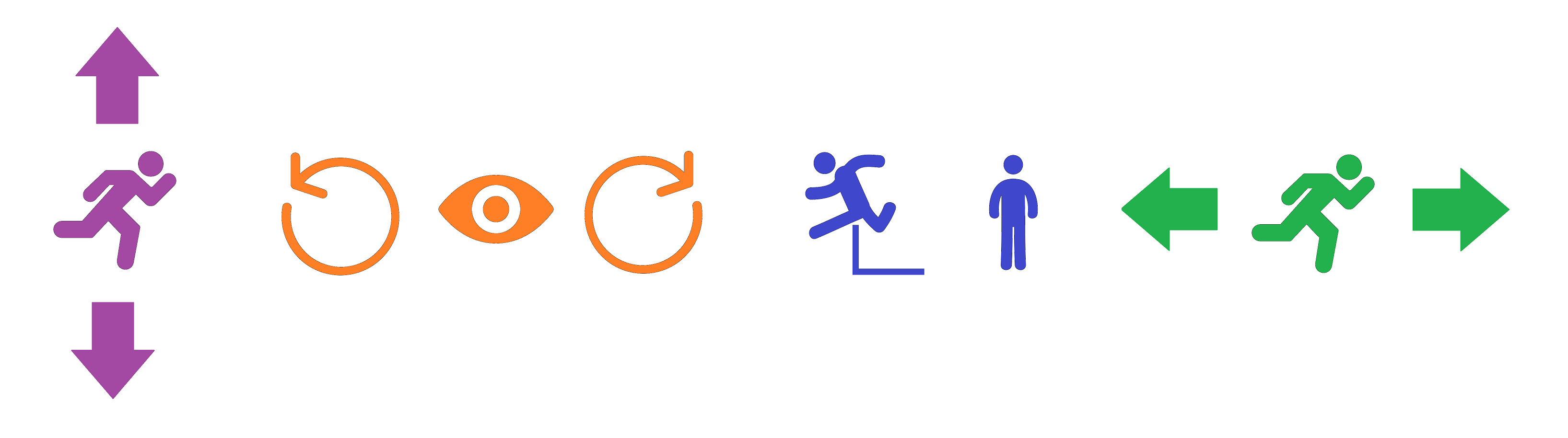

The action space of the Obstacle Tower consists of four “subspaces” (components):

- Moving forward or backward (or no-op)

- Turning camera counterclockwise or clockwise (or no-op)

- Jumping (or no-op)

- Moving left or right (or no-op)

These subactions from subspaces can be combined in any way: for example, you can turn camera clockwise while jumping left. In total, there are $3 \times 3 \times 2 \times 3 = 54$ combinations available.

Note that “forward”, “backward”, “left”, “right” are all relative to the player’s camera.

For convenience, here is a simple Action Interpreter widget, with style borrowed from crontab.guru:

Do nothing.

NOTE There was a bug in previous version of action interpreter: camera clockwise and camera counterclockwise was reversed. This was fixed on April 11th.

Retro Mode

These observation space and action space are quite different from the Arcade Learning Environment (ALE). To allow making a fair comparison, the environment also offers a “retro mode” where both the observation space and the action space is retro-fitted to be as similar as the ALE environments.

Instead of the two-component observation space, the “retro” observation space only returns an $84 \times 84$ image as observation. Instead of an auxiliary vector, it embeds the information into the image.

Instead of the four-component action space, the “retro” action space accepts a number from 0~53, resembling the 54 combinations of actions available.

You can activate the retro mode when you initialize the environment by giving retro=True as a parameter:

env = ObstacleTowerEnv('./ObstacleTower/obstacletower', retro=True)

What’s Next?

Now that we know what the agent will see and how the agent can act, we are ready to start training some baseline agents! A baseline agent can offer valuable insight on what possible improvements can be made. For example, if the baseline agent struggles in distinguishing objects, we might want a larger convolutional neural network. If the baseline agent cannot solve 2D puzzles, we might want to add some planning module to the agent.

Following the Obstacle Tower paper, we will use two popular baselines: Rainbow and PPO.