Dear readers,

We have reached 800 subscribers! Thank you so much for subscribing to RL Weekly and sharing it with your friends. I will try my best to provide you interesting news in reinforcement learning. Anyways, here is the 36th issue!

As always, please feel free to email me or leave any feedback. Your input is always appreciated.

- Ryan

Mastering Atari, Go, Chess and Shogi by Planning with a Learned Model

What it says

RL can be divided into Model-Based RL (MBRL) and Model-Free RL (MFRL). Model-based RL uses an environment model for planning, whereas model-free RL learns the optimal policy directly from interactions. Model-based RL has achieved superhuman level of performance in Chess, Go, and Shogi, where the model is given and the game requires sophisticated lookahead. However, model-free RL performs better in environments with high-dimensional observations where the model must be learned.

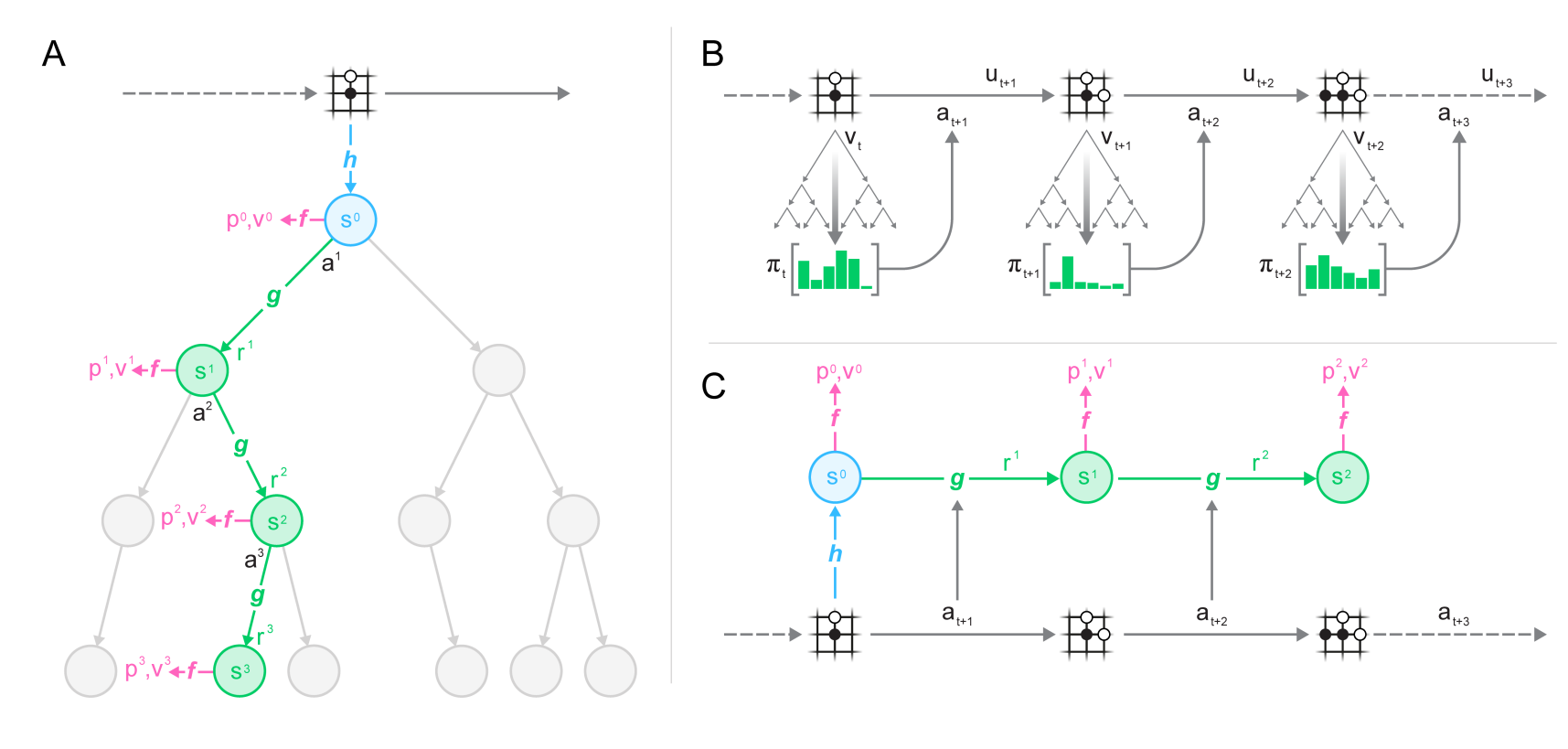

The representation function receives past observations from a selected trajectory as input and returns an initial hidden state. The dynamics function of MuZero is a recurrent process, where given the previous hidden state and a hypothetical action, it returns the predicted reward and the next hidden state. This hidden state is used by the prediction network to predict the value function and the policy, similar to AlphaZero. A generalized version of MCTS is used for planning and action selection. The representation function, dynamics function, and the prediction function are all trained jointly by backpropagation through time (Appendix G).

Unlike AlphaZero, MuZero uses its learned dynamics model without given knowledge of the game. The algorithm searches through invalid actions as well as valid actions, and does not discern terminal node (Appendix A). Yet, MuZero matches or exceeds the performance of AlphaZero in Chess, Shogi, and Go, despite not being given a model. For each board game, MuZero was trained for 12 hours on 16 TPUs for training and 1000 TPUs for self-play (Appendix G).

MuZero also sets a new state-of-the-art performance in Atari games. With 20 billion frames, it exceeds the performance of R2D2 trained with 37.5 billion frames. Its sample-efficient variant named “MuZero Reanalyze” that reanalyze old trajectories also achieve performance better than IMPALA, Rainbow, UNREAL, and LASER while only using 200 million frames to match the number of frames used (Appendix H). The training took 12 hours with 8 TPUs for training and 32 TPUs for self-play (Appendix G).

Read more

External resources

- AlphaZero (arXiv Preprint)

- r/machinelearning Subreddit Post

- r/reinforcementlearning Subreddit Post

- HackerNews Story

Safety Gym

What it says

In environments outside of simulators, the behavior of RL agents could have substantial consequences. Yet, as RL agents require trial and error to learn, the “safe exploration” problem naturally follows. The authors define safe RL as “constrained RL.” In constrained RL, every policy is assigned a constrained cost, and the optimal policy must have a constrained cost below some threshold. The validity of this approach can be inferred from its similarity to cumulative reward and the reward hypothesis that state that a scalar reward can describe the goal of an agent.

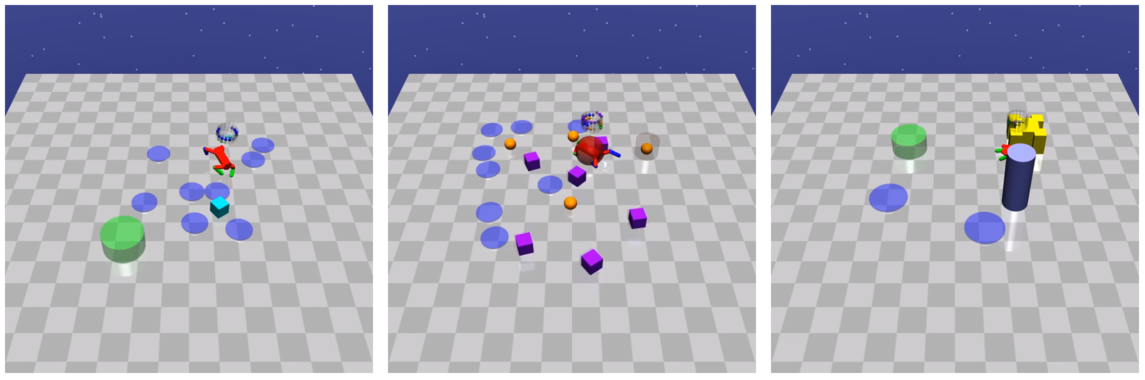

The authors also provide Safety Gym, a set of 18 environments that vary in difficulty. They are robot navigation environments, with 3 types of robots, 3 different tasks, and 2 difficulty settings. The authors test several baselines such as TRPO, PPO, their Lagrangian penalized versions, and Constrained Policy Optimization (CPO).

Read more

Here are some more exciting news in RL:

IKEA Furniture Assembly Environment for Long-Horizon Complex Manipulation Tasks

The IKEA Furniture Assembly Environment is an environment that simulates furniture assembly of a Baxter and Sawyer robot.

Data-efficient Co-Adaptation of Morphology and Behaviour with Deep Reinforcement Learning

To imitate how humans and animals evolved, we co-adapt robot morphology and its controller in a data-efficient manner.

Voyage Deepdrive

Deepdrive is an self-driving car simulator that supports reinforcement learning agents.

Third-Person Visual Imitation Learning via Decoupled Hierarchical Controller

Learn by looking at a demonstration from a third-person perspective through a hierarchical structure of high-level goal generator and low-level controller.